“It is not because you can build it that you should build it,” explains Jeff Gothelf, author of the excellent book Sense and Respond. Before spending time, energy, and money on an idea, test it first and learn from the market. This is the concept behind Jeff’s book, as well as behind Lean Startup and Growth Hacking.

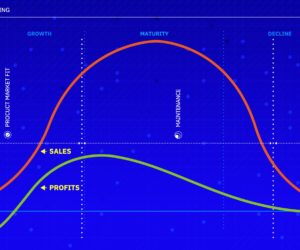

In my blog post, “Connecting the dots: four steps to imagine, build, test, and grow your digital venture while minimizing risk,” I explain at which stages of the product life cycle lean and growth hacking are, in our opinion, useful. Lean covers the prototype, then the MVP (Minimum Viable Product), which we prefer to call MVO (Minimum Viable Outcome), and then Product Market Fit (PMF). Once you reach PMF, it is time to scale, and growth hacking is your friend.

In both cases, we suggest testing your assumptions before you scale any of them. We call those tests MVTs, for Minimum Viable Tests. Start with a hypothesis. Make sure it is measurable (KPIs) and that you have goals (OKRs). Analyze the results and iterate. We use this approach in both our Lean sprints and our Growth sprints; only the purpose of the MVTs differs.

Breaking down an idea into short tests

The goal of the test is to reduce risk. If you fail, you want to fail small and fast. So the art is to take an idea and break it down into a brief initial hypothesis that gives you a sense of how the market is going to respond.

Let’s say I am a publisher. I am convinced that my digital audience wants the print experience online, so I ask my team to build a PDF reader that lets people leaf through the print edition. How do you test the concept before you build it? Do readers need it, and do they value it?

So you build an MVT. You add a button next to each article promoting the PDF reader. When users click on it, they get a pop-up window with a message along the lines of: “We are currently building the PDF reader. If you want to become a VIP tester, please leave your email and click submit.”

The number of clicks on the button relative to the number of page views will give you a sense of appetite, while the number of people submitting their email relative to the number of clicks on the button will show you how motivated they are.
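As a minimal sketch, both signals can be computed from three counters. The function names and the numbers below are hypothetical, chosen only for illustration; in practice the counts would come from your analytics tool. This kind of button-that-leads-nowhere-yet experiment is often called a fake-door test.

```python
def safe_ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def fake_door_rates(page_views: int, button_clicks: int, email_signups: int) -> dict[str, float]:
    """Compute the two signals of the PDF reader test.

    appetite:   share of page views that led to a click on the PDF reader button
    motivation: share of button clicks that ended with an email submission
    """
    return {
        "appetite": safe_ratio(button_clicks, page_views),
        "motivation": safe_ratio(email_signups, button_clicks),
    }


# Hypothetical counts, for illustration only.
rates = fake_door_rates(page_views=20_000, button_clicks=400, email_signups=60)
print(f"appetite: {rates['appetite']:.1%}, motivation: {rates['motivation']:.1%}")
# prints "appetite: 2.0%, motivation: 15.0%"
```

Keeping the two ratios separate matters: a high appetite with a low motivation suggests curiosity rather than a real need.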

Defining your Minimum Viable Test

Each of these tests will probably be managed by a PO (Product Owner) or a Growth Master. When you capture them, you need to be crystal clear: your Minimum Viable Test will be the basis for several user stories, so you want to make them as straightforward as possible.

How do you build an MVT? To create tests, we follow the suggestions of Sean Ellis in his book Hacking Growth (another must-read). Note that he does not call them MVTs, so don’t look for the acronym in his book.

This time, you are a B2B online insurance broker targeting SMBs. Your idea is to lower the cost of the insurance policy to increase your monthly sales. But it is a big undertaking, since you need to convince insurance companies to reduce the coverage in order to lower the policy cost.

The first step is to capture the hypothesis:

- TITLE — Give it a short, simple, and descriptive title. No fancy name, just something anybody can understand right away, such as “20% discount NYC barbershop FB campaign.”

- WHO? — Whom are you targeting with your MVT? Again, be precise. You are conducting a test, so there is no need for a broad audience. For our online insurance broker, “who?” could be 1,000 barbershops in NYC.

- WHAT? — What are you going to do? Here, a PPC campaign: we will run an ad offering a 20% discount on the policy.

- WHERE? — On Facebook.

- WHEN? — The “when” is always a bit tricky. It can be the duration of a campaign. It can be tied to a specific event (Mother’s Day) or triggered by a particular action (if the target audience does X, then Y happens). It can also combine several criteria. In the case of our online insurance broker, we are going to test for two weeks (the duration of the campaign).

- HOW? — Here I like to describe “what” and “who” are involved in making the MVT. It will help with scoring your MVT. In our example (FB campaign), I will need a budget ($1,000/week), a copywriter to write the copy (1 hour), a graphic designer to create a series of visuals (1 hour), and a campaign manager to set up and monitor the campaign (8 hours).

- WHY? — What is your hypothesis? What are you trying to prove? What is your objective? I like to write it in the following way (keeping the broker example): “My objective is to demonstrate that if we reduce the cost of the insurance policy by 20%, we are going to increase monthly sales by 50%.”
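Whether you capture MVTs in a form or a spreadsheet, each entry boils down to the seven fields above. The Python sketch below is one hypothetical way to represent that record (the class and field names are ours, not a standard), filled in with the broker example, plus a simple check that no field was left empty before the test enters the backlog.

```python
from dataclasses import dataclass, field


@dataclass
class MinimumViableTest:
    """One MVT captured with the TITLE / WHO / WHAT / WHERE / WHEN / HOW / WHY fields."""
    title: str  # short and descriptive, no fancy name
    who: str    # precise target audience
    what: str   # the action you will take
    where: str  # the channel
    when: str   # duration, event, or trigger
    how: list[str] = field(default_factory=list)  # resources involved (feeds the Ease score)
    why: str = ""  # the measurable hypothesis

    def is_complete(self) -> bool:
        """True only when every field has been filled in."""
        return all([self.title, self.who, self.what, self.where,
                    self.when, self.how, self.why])


broker_fb_test = MinimumViableTest(
    title="20% discount NYC barbershop FB campaign",
    who="1,000 barbershops in NYC",
    what="Run an ad offering a 20% policy discount",
    where="Facebook",
    when="Two weeks (duration of the campaign)",
    how=[
        "Budget: $1,000/week",
        "Writing the copy: 1 hour",
        "Graphic designer, series of visuals: 1 hour",
        "Campaign manager, setup and monitoring: 8 hours",
    ],
    why=("If we reduce the cost of the insurance policy by 20%, "
         "we are going to increase monthly sales by 50%."),
)
print(broker_fb_test.is_complete())  # True
```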

Measuring your Minimum Viable Test

If you can’t measure it, you can’t test it. In other words, don’t accept a test into your backlog if there is no straightforward way to evaluate it; don’t waste time, money, and energy on it. MVTs are a great way to eliminate vague ideas and hypotheses that are impossible to measure. So the first ROI of an MVT is to save you cash right away, and it can be a lot of cash: I have helped a few of our clients save amounts on the order of seven figures.

So the second step of the MVT is to capture two types of data: KPIs (Key Performance Indicators) and KRs (Key Results).

Keeping the broker example of increasing policy sales, the KRs could be:

- Increase CTR by 80%

- Increase weekly calls with prospects by 50%

- Increase weekly sales by 50%

The KPIs could be:

- # of impressions

- Cost per click

- Cost per mille/thousand (CPM)

- Click-through rate (CTR)

- # of prospects completing the contact form

- # of calls

- # of policy offers sent

- # of contracts
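The ad KPIs above follow standard definitions: CTR is clicks divided by impressions, cost per click is spend divided by clicks, and CPM is spend per thousand impressions. The sketch below computes them, along with the step-by-step conversion rates of the sales funnel, and checks a KR such as “Increase CTR by 80%” by comparing the result against a pre-test baseline. The baseline, the class and function names, and all numbers are hypothetical assumptions for illustration.

```python
from dataclasses import dataclass


def ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


@dataclass
class CampaignCounts:
    spend: float        # total ad spend in dollars
    impressions: int
    clicks: int
    contact_forms: int  # prospects completing the contact form
    calls: int
    offers_sent: int    # policy offers sent
    contracts: int


def campaign_kpis(c: CampaignCounts) -> dict[str, float]:
    """Ad KPIs plus the conversion rate at each step of the sales funnel."""
    return {
        "ctr": ratio(c.clicks, c.impressions),
        "cpc": ratio(c.spend, c.clicks),
        "cpm": 1000 * ratio(c.spend, c.impressions),
        "click_to_form": ratio(c.contact_forms, c.clicks),
        "form_to_call": ratio(c.calls, c.contact_forms),
        "call_to_offer": ratio(c.offers_sent, c.calls),
        "offer_to_contract": ratio(c.contracts, c.offers_sent),
    }


def key_result_met(baseline: float, observed: float, target_increase: float) -> bool:
    """True if observed beats baseline by at least target_increase (0.8 means +80%)."""
    return baseline > 0 and (observed - baseline) / baseline >= target_increase


# Hypothetical two-week numbers, for illustration only.
counts = CampaignCounts(spend=2000, impressions=200_000, clicks=3_000,
                        contact_forms=150, calls=60, offers_sent=40, contracts=10)
kpis = campaign_kpis(counts)
print(f"CTR {kpis['ctr']:.2%}, CPC ${kpis['cpc']:.2f}, CPM ${kpis['cpm']:.2f}")
# KR "Increase CTR by 80%", against a hypothetical pre-test CTR of 0.8%.
print(key_result_met(baseline=0.008, observed=kpis["ctr"], target_increase=0.8))
```

With these made-up counts, the first line prints “CTR 1.50%, CPC $0.67, CPM $10.00” and the KR check prints True.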

Prioritizing your Minimum Viable Tests

Now you have your tests. Which one do you launch first? There are different ways to score MVTs. Sean Ellis, in Hacking Growth, proposes the ICE score. At Pentalog, we created the DICET score. Again, it is only one option, and you can find others. As long as you have a logical and consistent way to score your MVTs, you are OK.

The DICET score is an extension of the ICE score.

- D for dollars or revenue generated.

- I for impact.

- C for confidence.

- E for ease.

- T for Time-to-Money.

Each of them is scored on a scale of 1 to 10, with 1 being the worst and 10 the best. One of the specificities of Sean’s method is that the author of the MVT scores it. People often object that this is biased or pure guesswork. Sure, there is some bias and some guessing. But at the end of the day, it does not matter. Do you have a better way to measure than the opinion of a few people, or the “HiPPO” (highest paid person’s opinion)?

So going back to the online insurance B2B broker for SMBs:

- D (dollars) — We like to provide a $ amount to help the scoring. What are the average weekly sales? Let’s say $100K (100 policies sold at $1K each). To keep it simple, we can say that 10 = $100K, and so 1 = $10K. If you think your MVT is going to generate $40K, then your score is 4.

- I (impact) — How much of an impact do you think your hypothesis is going to have on the business? In our case, reducing the insurance policy price by 20% will increase sales by 50%. Pretty impactful if it works, so you probably want to score it 9 or 10.

- C (confidence) — How much do you believe that your MVT is going to prove your hypothesis and help achieve your Key Results (KRs)? In our example, do you think the results of your FB campaign are going to show the KRs you are looking for? The more confident you are, the higher your score. Let’s say you are very confident about the broker FB campaign and give it an 8.

- E (Ease) — The Ease score comprises three components. Team: do you have the resources to run your test, and are they available? Cost: how expensive is this campaign? Data: how fast are you going to get data and learn? I like to score each of them separately and then average them (divide the sum by 3). For example, for our FB campaign, team is a 10 (I have the people and they are available), cost is a 7 (not expensive, but not exactly cheap), and data is a 10 (data will come in very fast). Score: 27/3 = 9.

- T (Time-to-Money) — Here again, we like to give a sense of timing. Usually, we give a 5 to the average closing time for a deal, so if that is 30 days, 5 = 30 days. For the sake of our example, let’s give it a 5.

So for our broker FB campaign, the scores are D=4, I=9, C=8, E=9, and T=5, and the final score, their average, is 35/5 = 7.
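The arithmetic above can be sketched in a few lines of Python. We assume the final score is the plain average of the five components, which reproduces the 7 from the broker example, and that the dollar score scales linearly (1 = $10K, 10 = $100K) and is clamped to the 1 to 10 range; the clamping and all names are our assumptions. Since only one anchor point (5 = 30 days) is given for Time-to-Money, T is entered directly rather than computed.

```python
from dataclasses import dataclass


def dollars_score(expected_revenue: float, revenue_for_ten: float) -> float:
    """Linear mapping where revenue_for_ten earns a 10, clamped to 1..10 (clamp is assumed)."""
    return max(1.0, min(10.0, 10 * expected_revenue / revenue_for_ten))


def ease_score(team: float, cost: float, data: float) -> float:
    """Ease is the average of the team, cost, and data sub-scores."""
    return (team + cost + data) / 3


@dataclass
class Dicet:
    d: float  # dollars
    i: float  # impact
    c: float  # confidence
    e: float  # ease
    t: float  # time-to-money

    def score(self) -> float:
        """Plain average of the five components (assumed; it matches the broker example)."""
        return (self.d + self.i + self.c + self.e + self.t) / 5


broker_fb = Dicet(
    d=dollars_score(40_000, revenue_for_ten=100_000),  # $40K where $100K = 10, so 4
    i=9,
    c=8,
    e=ease_score(team=10, cost=7, data=10),             # 27 / 3 = 9
    t=5,                                                # 5 = average closing time (30 days)
)
print(broker_fb.score())  # 7.0
```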

When organizing the backlog, do you follow the scoring? Usually, after quickly checking the assumptions (and asking for help on those that are unclear), the answer is “yes.” Otherwise, why score? Could an emergency come up? Probably. But how do you know it is an emergency? How do you know its learning will be more valuable than that of another MVT? It is not easy. So flexibility is essential, and agility is key, but don’t take it so far that the scoring no longer makes sense.
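A minimal sketch of that rule: the backlog is sorted by DICET score, with a hypothetical emergency flag that lets a justified exception jump the queue. The flag, the names, and every score except the broker campaign’s 7 are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    dicet: float             # final DICET score, 1 to 10
    emergency: bool = False  # hypothetical manual override, to be used sparingly


def order_backlog(items: list[BacklogItem]) -> list[BacklogItem]:
    """Flagged emergencies first, then everything else by descending DICET score.

    sorted() is stable, so items with equal keys keep their original submission order.
    """
    return sorted(items, key=lambda item: (not item.emergency, -item.dicet))


backlog = [
    BacklogItem("PDF reader fake-door button", 6.2),
    BacklogItem("20% discount NYC barbershop FB campaign", 7.0),
    BacklogItem("Hypothetical urgent pricing-page test", 5.1, emergency=True),
]
for item in order_backlog(backlog):
    print(f"{item.dicet:4.1f}  {item.title}")
```

Keeping the override as an explicit field, rather than silently editing scores, makes every exception to the scoring visible in the backlog.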

Proposing Minimum Viable Tests

Who proposes MVTs? There are two questions encapsulated in this one. First, can anybody on the team suggest an MVT? Ideas are everywhere, and anybody can have great suggestions, so we recommend letting anybody on the team propose MVTs. Second, can a developer write a marketing or UX MVT, and vice versa? We also suggest “yes.” Collective intelligence seems to work.

Do you need a specific tool to write MVTs? No. We use Google Forms and Google Sheets. There is also a new tool on the market that seems very promising, though we have not yet tested it adequately: Ducalis.io.

Is it necessary to hold a group session to generate MVTs? No. Anybody, at any time, should be able to push an MVT into the MVT backlog. That said, we recommend holding MVT jams once a month to keep the backlog full. We have a seminar on how to run a Growth Jam (we just renamed it MVT jam).
