Today’s cybersecurity threat landscape is in a constant state of flux. With more employees working remotely and human error among the top threat vectors, identity and access management architecture has become a security priority.

Identity and access management (IAM) is one of those security functions that many of us take for granted. But when we do so, we miss an opportunity to create a highly effective security perimeter based on user authentication.

IAM is the discipline that ensures the right people have access to the right things at the right time. Done well, IAM can prevent data breaches and limit how far a hacker can penetrate your network if an attack succeeds.
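The "right people, right things, right time" idea can be sketched as a small role-based access control (RBAC) check. This is a minimal illustration, not how any particular IAM product works. The roles, permissions, business-hours window and the `User`/`is_allowed` names are all hypothetical. Real IAM services express such rules in their own policy languages.

```python
from dataclasses import dataclass, field
from datetime import datetime, time
from typing import Dict, Set, Tuple

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "developer": {"repo:read", "repo:write"},
    "auditor": {"repo:read", "logs:read"},
}

# Hypothetical access window standing in for "the right time".
BUSINESS_HOURS: Tuple[time, time] = (time(8, 0), time(18, 0))


@dataclass
class User:
    name: str
    authenticated: bool  # outcome of the authentication step (e.g., password + MFA)
    roles: Set[str] = field(default_factory=set)


def is_allowed(user: User, permission: str, now: datetime,
               window: Tuple[time, time] = BUSINESS_HOURS) -> bool:
    """Grant access only to the right person, for the right thing, at the right time."""
    if not user.authenticated:  # right person: identity must be verified first
        return False
    start, end = window
    if not (start <= now.time() <= end):  # right time: inside the allowed window
        return False
    # right thing: at least one of the user's roles must include the permission
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


if __name__ == "__main__":
    alice = User("alice", authenticated=True, roles={"developer"})
    print(is_allowed(alice, "repo:write", datetime(2021, 6, 1, 10, 0)))  # True
    print(is_allowed(alice, "logs:read", datetime(2021, 6, 1, 10, 0)))   # False
```

Keeping authentication ("who are you?") separate from authorization ("what may you do, and when?") also limits the damage when one check fails. A stolen password alone does not grant permissions the account's roles never had.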

Pentalog takes data security very seriously, and as of the second quarter of 2021, we have standardized our IAM architecture. Going forward, Pentalog’s default authentication and authorization policies and processes will build on current IAM products and best practices. Clients who prefer a different approach can submit a request, which we will review.

To better illustrate why the Pentalog team is prioritizing IAM architecture standards, we have compiled background information on authentication, tips for evaluating serverless identity providers and what to expect from Pentalog’s IAM standardization.

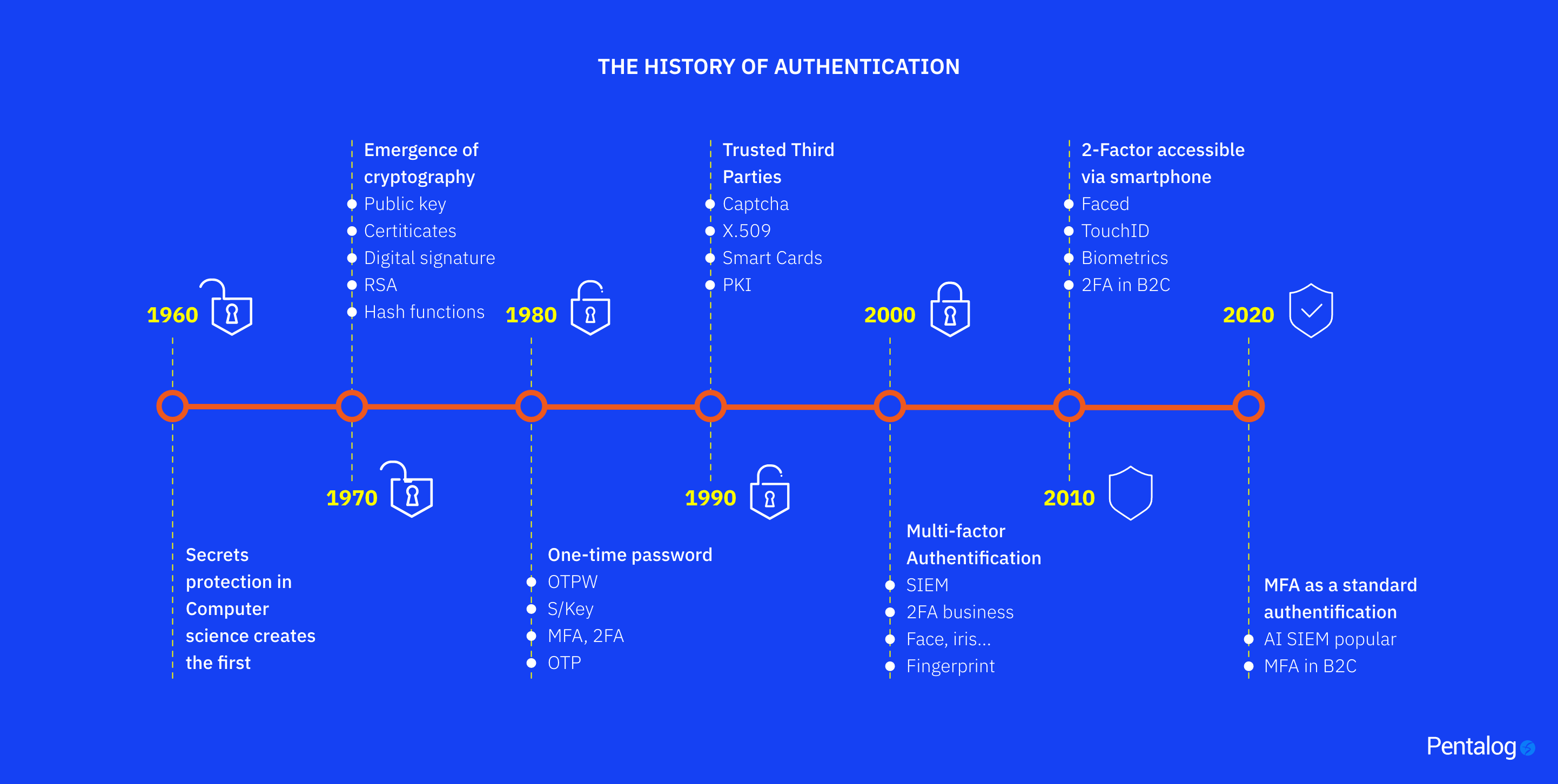

A Brief History of Authentication

1960s

During the ’60s, information security became an important computer science discipline. Security teams didn’t yet have to worry about internet vulnerabilities, but security was password-centric: if an unauthorized user accessed the system, it was fairly easy to extract the passwords from the file or database where they were stored.

1970s

In the ’70s, the password storage problem was addressed with cryptographic hash functions. A hash function made it possible to verify a password without storing the password itself: the system keeps only the hash and compares it with the hash of whatever the user types. This mechanism was sufficient for the time, but it was still vulnerable to basic brute-force attempts.
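To make the idea concrete, here is a minimal Python sketch of hash-based password verification. It uses the standard library’s `hashlib.pbkdf2_hmac`, a modern salted and iterated construction rather than the schemes of the 1970s. The random salt and the large iteration count are what later made brute-force guessing far more expensive. The function names and the iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import os
from typing import Tuple

# Illustrative work factor (assumption); real deployments tune this to their hardware.
ITERATIONS = 200_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest). Only these two values are stored, never the password."""
    salt = os.urandom(16)  # a fresh random salt per user defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(candidate: str, salt: bytes, stored_digest: bytes) -> bool:
    """Re-hash the candidate with the stored salt and compare the results."""
    digest = hashlib.pbkdf2_hmac("sha256", candidate.encode("utf-8"), salt, ITERATIONS)
    # Constant-time comparison avoids leaking how many leading bytes matched.
    return hmac.compare_digest(digest, stored_digest)


if __name__ == "__main__":
    salt, digest = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, digest))  # True
    print(verify_password("wrong guess", salt, digest))  # False
```

An attacker who steals the stored salt and digest still has to guess passwords one by one, and each guess costs the full iteration count.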

Later, researchers concerned with securing data in transit developed public key cryptography. This technology was primarily used in government infrastructure at first; however, it was the catalyst for digital certificates, digital signatures and asymmetric key algorithms such as RSA.

1980s

During the ’80s, attackers could still guess, steal or intercept passwords with ease. In response, a new concept emerged: one-time passwords, which later led to standards such as the OATH HOTP and TOTP algorithms. The basic idea was to create passwords that couldn’t be predicted but could still be validated.

These passwords were delivered to users through dedicated hardware or separate communication channels. Combining such a code with a regular password is what we now call two-factor authentication (2FA) or, more generally, multifactor authentication (MFA).
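The "unpredictable but verifiable" property can be illustrated with a minimal sketch of HOTP (RFC 4226) and its time-based variant TOTP (RFC 6238). These are the algorithms behind many hardware tokens and authenticator apps. The token and the server share a secret and derive the same short code from a counter or the current time. Without the secret the code cannot be predicted, yet the server can recompute and check it. The function names and the one-step drift window are illustrative assumptions; production systems should rely on a vetted library.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    msg = struct.pack(">Q", counter)  # counter as an 8-byte big-endian integer
    mac = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits select a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based variant (RFC 6238): the counter is the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, code: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept codes from neighboring time steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for delta in range(-window, window + 1):
        c = counter + delta
        if c >= 0 and hmac.compare_digest(hotp(secret, c, digits), code):
            return True
    return False


if __name__ == "__main__":
    secret = b"12345678901234567890"  # test secret from RFC 4226, Appendix D
    print(hotp(secret, 0))  # 755224
    print(verify_totp(secret, totp(secret)))  # True
```

A code that is intercepted is only useful for a few seconds. That is why pairing it with a password (2FA) defeats attacks that merely steal or guess the password.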

1990s

Public key cryptography was a very powerful concept, but it raised a question of trust: how confident can you be in whoever issued a key? During the ’90s, trusted third parties that vouch for keys by issuing digital certificates became commercially available.

2000s to today

By 2000, 2FA was popular in enterprises, and “more than one factor” became the standard for authentication.

It took another 10 years for the smartphone industry to bring 2FA to consumers. By then, 2FA no longer required specialized hardware; a simple text message was good enough.

Behind the scenes, tremendous innovation in big data and machine learning enabled AI-driven security information and event management (SIEM) tooling.

Today, MFA is the standard for consumer authentication, and AI-driven SIEM has become increasingly popular.

The authentication landscape is much more complex than this condensed timeline suggests. In fact, authentication evolves so rapidly that it is almost impossible for developers to keep up with the emerging concepts their jobs require.

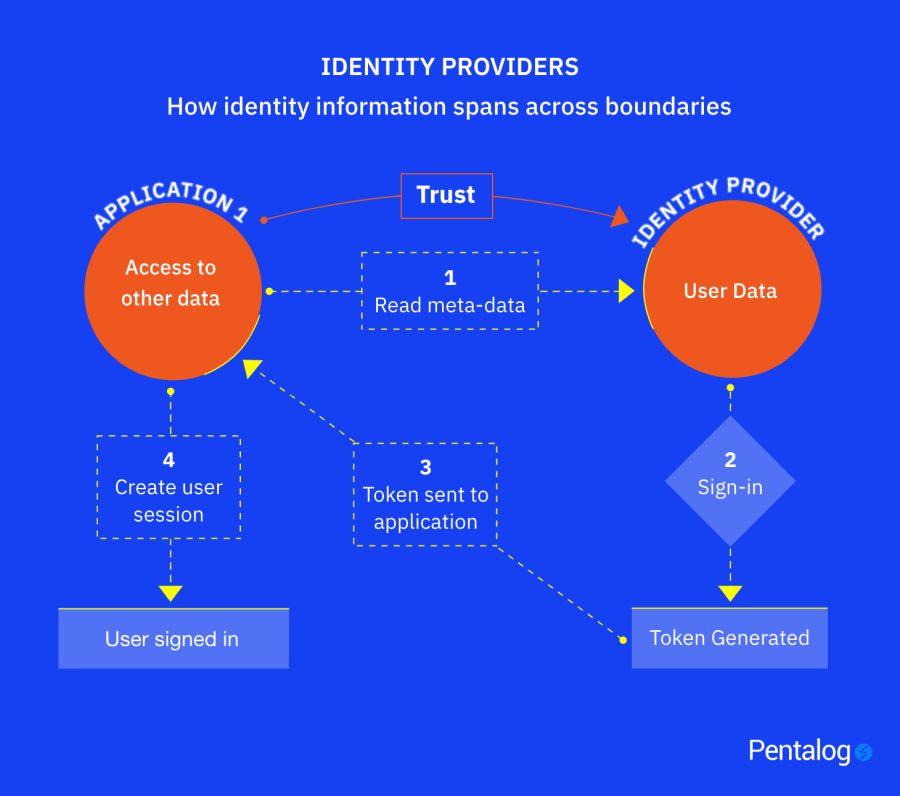

Serverless Identity Providers

The timeline above does not include other large and highly sophisticated fields of infrastructure and data, and it barely scratches the surface of security knowledge. Selecting an authentication solution is a high-risk initiative, so the decision-making process should involve both developers and security and infrastructure experts.

To this end, we recommend that our customers not reinvent the wheel and instead consider an industry-proven identity and access management solution.

In the past two years, most of the user services implemented by Pentalog were based on the Microsoft Active Directory family of products or Amazon Cognito.

Of course, our customers are free to use whatever product makes the most sense for them in their context, whether that’s Microsoft, Amazon, Okta, Ping Identity or another IAM solutions provider.

These services will become an integral part of your organization’s infrastructure, so be sure to evaluate compliance, backup strategy, environment management, specific permissions and downtime policies during the selection process.

What to Expect from Standardization

We consider IAM standardization necessary to ensure consistency across the default solution architectures we propose to our customers. This standard emerged from close collaboration between our customers and Pentalog’s technology office, customer DevOps, infrastructure and security departments.

Timelines

When implementing one of the solutions mentioned above for a new application that requires basic user sign-in and access control, expect 5 to 10 days of DevOps work. By contrast, refactoring a couple of months after go-live can take more effort than all of the product development up to that point.

Some sophisticated authorization flows can require 2 to 3 months of development, configuration and testing, even for new applications. Although this is the exception, if such a solution is not designed correctly from the start, refactoring it could take quarters.

Exceptions

Requests to deviate from the IAM standard will be reviewed by our technology office, then validated by the security team and formally approved by the customer, with the approval recorded in our collaboration history.

It’s a new era for businesses that’s driven by digital technology and supported by a highly distributed workforce. Today’s security threats evolve and change rapidly, making it difficult to maintain an effective security perimeter.

Identity and access management solutions help keep data and networks secure by ensuring only authorized users can access company resources. Pentalog is committed to helping customers achieve this by standardizing IAM architectures across the board.